As we gear up for Genie’s upcoming agentic release, our UX and product teams have been rethinking what intuitive, AI-powered design really means. What happens when software understands intent, acts autonomously, and lets users steer rather than micromanage? In this post, Rosie, our Lead Product Designer, explores how agentic principles are transforming interface design. Read on to find out how the user experience of Genie has evolved.

How Agentic AI Will Reshape User Interfaces

User interfaces were invented because computers couldn’t understand us. That is changing.

Large language model agents now understand natural language, remember context, and can operate software for us. The classic user interface, with its pages of buttons and menus, will fade into the background and give way to something that captures intent, shows progress, and lets humans steer. So what does that mean for how we will interact with software?

1. Chat is the new command line

Natural language will become the primary input. Instead of interacting with buttons, toolbars and screens, users will be able to ask: “Make section 3 align with the new privacy policy and tighten third‑party security.” Because it is much easier to give an AI lots of context by dictating than by typing, people will likely start to prompt with their voice.

2. The work moves behind the curtain

Rather than micromanaging spreadsheets, videos, code or contracts, users describe outcomes, and the agent manipulates the work itself behind the scenes. Humans step in to edit directly only in ambiguous or high‑risk cases.
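As a rough sketch of that hand-off rule, the snippet below decides when an agent's step should pause for a human. The `AgentStep` fields, the `needs_human` helper, and both thresholds are hypothetical, chosen only for illustration; they are not taken from Genie or any real product.

```python
from dataclasses import dataclass

# Illustrative thresholds only; a real product would tune or replace these.
MIN_CONFIDENCE = 0.8  # below this, the request counts as ambiguous
MAX_RISK = 0.3        # above this, the step counts as high-risk


@dataclass
class AgentStep:
    description: str
    confidence: float  # agent's estimate that it understood the request (0 to 1)
    risk: float        # estimated impact if the step turns out wrong (0 to 1)


def needs_human(step: AgentStep) -> bool:
    """Return True when the step is ambiguous or high-risk."""
    return step.confidence < MIN_CONFIDENCE or step.risk > MAX_RISK
```

Everything that passes the check runs behind the curtain; anything that fails it is surfaced for the user to edit or confirm.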

3. Multiple bots, one chat

Specialist AI agents, for example ones for Research, Editing, Planning or Communication, will work together in the same chat. Each one explains its actions right there in the thread, so you never have to jump to another panel or screen.

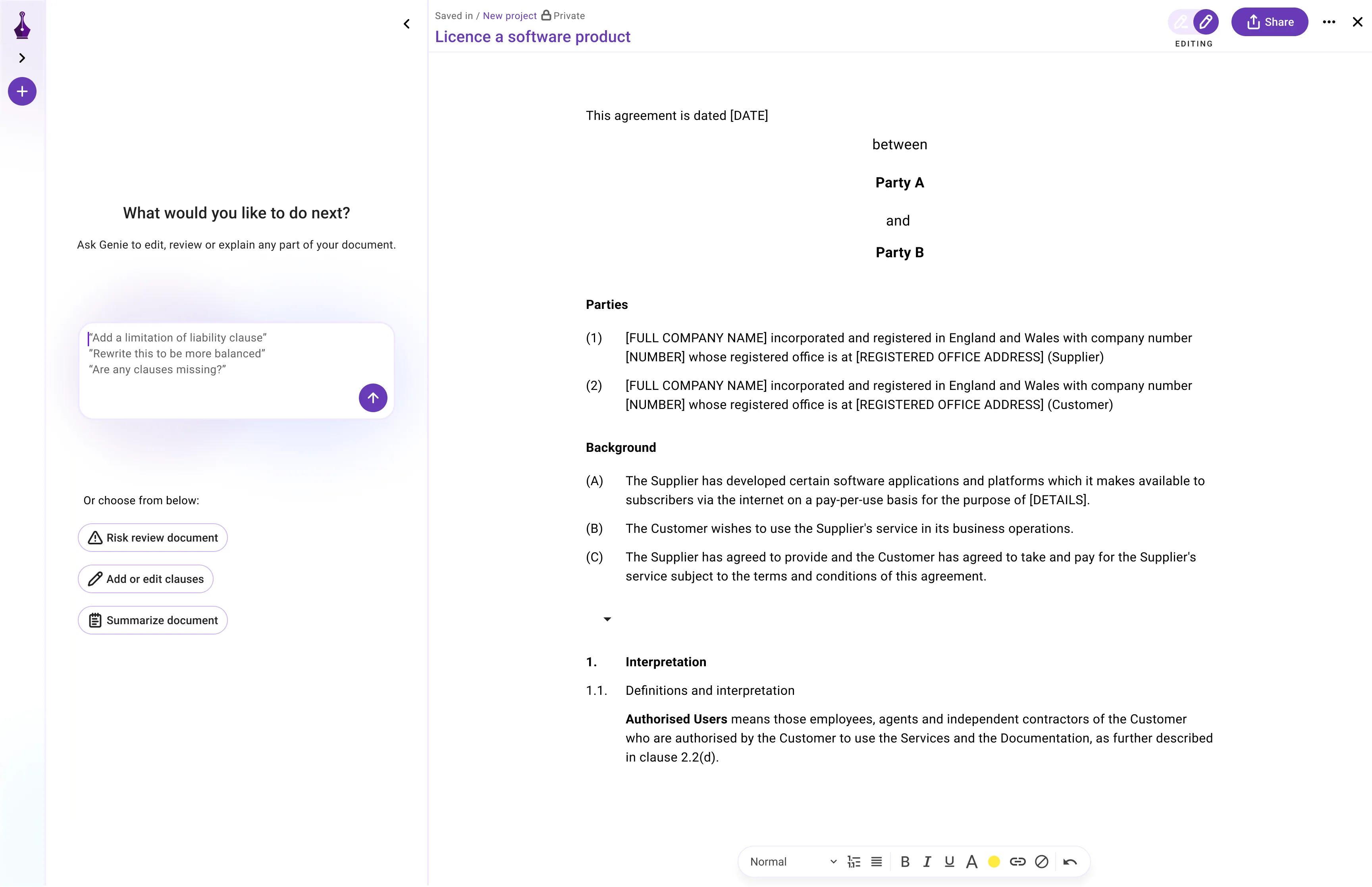

4. Two-panel UI paradigm

A new template is emerging for structuring user interfaces:

Left-hand side:

Chat with agents, citations & thinking

Users can accept, reject or tweak agent output directly in context.

Right-hand side:

Live preview of the generated artefact

Top:

Project information & status

5. Interfaces that vanish

When systems can infer intent from preferences, history and actions, many flows become automatic, or zero‑touch. Components appear where your eyes rest, then melt away.

Upload a file ➝ Message shows “Working…” ➝ Summary opens with key insights

Food ordering shrinks from ten taps to one. Screens aren’t dead; they appear only when needed for confirmation or transparency. Apps will start to feel lighter and less cluttered. Instead of clicking through lots of menus, the right tools will simply show up when you need them and then disappear again when you don’t.

6. Interfaces with a touch of magic

The parts of the interface that do stick around are starting to feel a little magical. They don’t just sit there. They move, adapt, and respond to you, almost like they know what you’re looking for before you do.

Think of it like this: helpful suggestions pop up right when they’re useful (not too early, not too late). Buttons and panels appear smoothly, only when needed. Unnecessary stuff stays out of the way, so you can focus. And sometimes, there’s even a little spark that makes using the app feel fun and rewarding. It’s the kind of magic that makes tech feel effortless.

Implications for Legal Tech Solutions

AI agents won’t just streamline individual tasks; they’re reshaping how people involved in legal work prevent, find and act on risks.

These new tools democratise legal work, empowering business teams to take on more responsibilities. They will also help users focus less on the details and more on higher‑value judgment and strategy.

Intent over interfaces

Users (both lawyers and non-lawyers) can describe the outcome they need (e.g. “Increase protections on our intellectual property”); the agent then decides whether that means redrafting a clause, inserting appendices, or adding a side letter.

Decision dashboards, not a maze of files

Risk scores, open issues, and deal blockers become the primary things to monitor, instead of combing through dozens of documents.

Specialist agents automate legal tasks

Specialist AI agents can take on different legal workflows, while the human remains the decision‑maker. For example, dedicated agents might monitor new legislation and flag non‑compliant clauses, or suggest fallback language during negotiations.

All of this can be triggered automatically by the agent and can happen in a single chat thread. Each agent appears only when its expertise is relevant, explains why it acted, and offers Apply / Tweak / Ignore buttons — no extra panels required.
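One way to picture this in-thread pattern is sketched below: a suggestion carries the agent's name and its reason, and the human resolves it with Apply, Tweak or Ignore. The agent names, trigger keywords, and the `relevant_agents` and `resolve` helpers are all hypothetical, and simple keyword matching stands in for whatever relevance logic a real system would use.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

# Hypothetical agents and the keywords that make each one relevant.
AGENT_TRIGGERS = {
    "Compliance": ("personal data", "gdpr"),
    "Negotiation": ("liability", "indemnity"),
}


class Decision(Enum):
    APPLY = "apply"
    TWEAK = "tweak"
    IGNORE = "ignore"


@dataclass
class Suggestion:
    agent: str      # which specialist agent is speaking
    reason: str     # the agent's explanation, shown in the thread
    original: str   # clause text before the change
    proposed: str   # clause text the agent recommends


def relevant_agents(clause_text: str) -> list[str]:
    """Return only the agents whose trigger keywords appear in the clause."""
    text = clause_text.lower()
    return [name for name, keys in AGENT_TRIGGERS.items()
            if any(key in text for key in keys)]


def resolve(suggestion: Suggestion, decision: Decision,
            tweaked_text: Optional[str] = None) -> str:
    """Return the clause text after the human's decision."""
    if decision is Decision.APPLY:
        return suggestion.proposed
    if decision is Decision.TWEAK:
        if tweaked_text is None:
            raise ValueError("TWEAK needs the user's edited text")
        return tweaked_text
    return suggestion.original  # IGNORE leaves the clause untouched
```

The point of the design is that the human stays the decision-maker: nothing changes in the document until `resolve` is called with an explicit choice.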

Legal work shifts from manual redlining to agent‑mediated risk management, with the UI surfacing decisions rather than every word.

In sum

User interfaces are shrinking. Because computers now better understand your intent and your context, they handle the details for you and bring you back only for sensitive or high‑risk decisions. In legal work, that turns contract redlining into a brief “confirm or tweak” moment instead of hours of manual edits.

We don’t know exactly how user interfaces will look in five or ten years, but they are likely to be incredibly different from the ones we know today.
